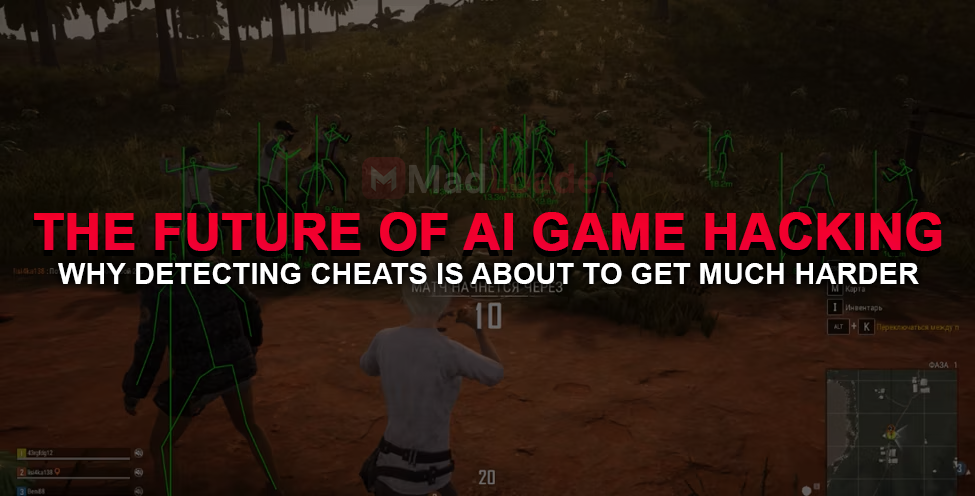

The Future of AI Game Hacking: Why Detecting Cheats Is About to Get Much Harder

For years, the battle between game developers and cheat makers has followed a predictable cycle. Game developers deploy new anticheat mechanisms, cheat developers find weaknesses, and the cycle repeats. However, the rapid advancement of artificial intelligence (AI) is fundamentally altering this dynamic. Traditional cheat detection methods (signature scans, behavioral heuristics, server-side analytics) are being challenged by AI-driven cheat systems that are adaptive, concealed, and increasingly indistinguishable from legitimate player behavior.

AI game hacking is not just another wave of cheats; it represents an architectural shift in how cheats are created and deployed. As a result, the difficulty of detecting malicious behavior is rising in ways that will define the next decade of game security.

AI Cheats Are Becoming Behaviorally Human

Historically, cheats left identifiable footprints: impossible reaction times, perfect accuracy, deterministic movement, or predictable patterns. Anticheat systems could detect these anomalies through statistical thresholds or heuristic models.
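To make the idea of statistical thresholds concrete, here is a minimal Python sketch of the kind of rule-based check described above. Everything in it is an assumption made for illustration: the `SessionStats` structure, the `threshold_flags` function, and the three cutoff values are hypothetical and not taken from any real anticheat product.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List

# Hypothetical cutoffs, chosen only for illustration.
MIN_HUMAN_REACTION_MS = 100.0   # a faster average reaction is treated as implausible
MAX_PLAUSIBLE_ACCURACY = 0.95   # a sustained hit rate above this is treated as suspicious
MIN_REACTION_STDDEV_MS = 5.0    # near-zero spread suggests deterministic input


@dataclass
class SessionStats:
    """Per-session measurements (hypothetical schema)."""
    reaction_times_ms: List[float]
    shots_fired: int
    shots_hit: int


def threshold_flags(session: SessionStats) -> List[str]:
    """Return the names of the threshold rules that this session violates."""
    flags = []
    times = session.reaction_times_ms
    if times and mean(times) < MIN_HUMAN_REACTION_MS:
        flags.append("impossible_reaction_time")
    if len(times) > 1 and pstdev(times) < MIN_REACTION_STDDEV_MS:
        flags.append("deterministic_timing")
    if session.shots_fired > 0:
        accuracy = session.shots_hit / session.shots_fired
        if accuracy > MAX_PLAUSIBLE_ACCURACY:
            flags.append("perfect_accuracy")
    return flags


# A blatant, old-style cheat trips every rule.
blatant = SessionStats([40.0, 41.0, 40.0, 39.0], shots_fired=100, shots_hit=100)
# A session with human-looking timing and accuracy trips none.
humanlike = SessionStats([220.0, 310.0, 180.0, 260.0], shots_fired=100, shots_hit=38)

print(threshold_flags(blatant))
print(threshold_flags(humanlike))
```

Fixed cutoffs like these only catch behavior that falls outside the human range. Any session that stays inside that range, whether it comes from a person or from software, passes unflagged.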

AI, by contrast, learns to mimic human variability. Reinforcement learning models can train on thousands of gameplay hours to produce inputs that look natural and non-deterministic. Instead of snapping instantly to targets, an AI-assisted aimbot might approximate the way a human tracks motion, complete with micro-corrections, slight delays, and even simulated mistakes.

The consequence is profound: behavioral analysis, once a reliable line of defense, loses significant discriminatory power.

AI Can Dynamically Evade Detection

Unlike traditional programs that operate on fixed logic, AI-driven cheat systems can reconfigure themselves in real time. If an anticheat update blocks one behavioral pathway, the model can adjust its output to stay within acceptable ranges. Some systems may soon incorporate self-diagnostic layers that test whether their behavior triggers suspicion, then automatically self-tune to avoid detection.

This is an evolutionary capability. Anticheat solutions are built reactively, but AI cheats are moving toward continuous, autonomous adaptation.

Anticheat Systems Face a Data Disadvantage

To build effective machine learning models, anticheat developers need examples of both legitimate and illicit gameplay patterns. However, as AI cheats become increasingly customized and locally trained by individuals, the diversity of malicious behavior expands dramatically.

Developers no longer face a handful of widely distributed cheat binaries. Instead, they confront a landscape of potentially millions of personalized models that differ in timing, targeting logic, and execution. This creates a moving-target problem:

- There is no single signature to detect.

- There is no centralized dataset of malicious behavior.

- There is no consistent pattern that distinguishes cheating from high-skill play.

This dramatically increases the cost and complexity of maintaining robust detection frameworks.

The Line Between Assistance and Cheating Is Blurring

AI also introduces a philosophical dilemma for detection teams: what counts as a cheat?

Tools that provide real-time suggestions, predictive pathing, or strategic insights may not directly manipulate player input. They assist without taking full control, and some operate entirely outside the game client (e.g., via computer vision overlays that never touch memory). Distinguishing between benign accessibility tools, AI-assisted coaching, and covertly automated gameplay is becoming an ambiguous policy challenge.

In an industry that depends heavily on clear rules of fair play, ambiguity becomes an exploitable weakness.

The Future: A New Era of Security Architecture

To meet these challenges, the game industry will need to transition from static anticheat strategies to more holistic security models. Future approaches may include:

- Server-side behavioral correlation, where gameplay events are modeled at population scale to identify statistical impossibilities.

- Trusted execution environments, limiting the ability of external models to inject or interpret data.

- Real-time model watermarking, to detect AI-like behavioral traces.

- Continuous authentication systems, validating players through identity-linked or biometric interaction patterns.

None of these solutions will be simple or inexpensive to implement. But the alternative, allowing AI-driven cheats to erode competitive integrity, is even more costly.
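As a rough illustration of the first item in the list above, server-side behavioral correlation, the sketch below compares one metric across a whole population of players and flags those far from the population median. It is only a sketch under stated assumptions: the function names, the sample headshot ratios, and the player IDs are hypothetical, and the modified z-score (with its conventional 0.6745 scaling constant and 3.5 cutoff) is simply one common robust outlier test, not a method the article's sources are known to use.

```python
from statistics import median
from typing import Dict, List


def robust_z_scores(values: Dict[str, float]) -> Dict[str, float]:
    """Modified z-score per player, based on the median and the
    median absolute deviation (MAD) of the whole population."""
    med = median(values.values())
    mad = median(abs(v - med) for v in values.values())
    if mad == 0:
        # No spread in the population: nothing can be called an outlier.
        return {player: 0.0 for player in values}
    return {player: 0.6745 * (v - med) / mad for player, v in values.items()}


def population_outliers(values: Dict[str, float], cutoff: float = 3.5) -> List[str]:
    """Return the players whose metric deviates strongly from the population."""
    scores = robust_z_scores(values)
    return sorted(player for player, z in scores.items() if abs(z) > cutoff)


# Hypothetical per-player headshot ratios collected on the server.
headshot_ratio = {
    "p1": 0.18, "p2": 0.22, "p3": 0.20, "p4": 0.25,
    "p5": 0.21, "p6": 0.19, "p7": 0.85,
}

print(population_outliers(headshot_ratio))
```

Using the median and MAD rather than the mean and standard deviation keeps a few extreme players from distorting the baseline they are measured against. A flagged player could still be an unusually skilled one, so output like this is better suited to queueing accounts for review than to automatic enforcement.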

Conclusion

AI game hacking represents a paradigm shift. As machine learning models become more sophisticated, more personalized, and more human-like in their decision patterns, the traditional methods of cheat detection will lose effectiveness. Developers must prepare for a future where cheats are not simple scripts but adaptive agents capable of evolving faster than the systems designed to stop them.

The race between cheats and anticheat measures has always been adversarial. With AI entering the arena, that race is accelerating, and the margin for error is shrinking.

If AI cheats exist, why not AI anticheat? In the next post, we will take a look at the AI anticheat experiment created by Basically Homeless and what it reveals about the future of automated detection.