If AI cheats exist, why not AI anti-cheat?

Inside Waldo, the AI anticheat developed by Basically Homeless

In Summary: YouTuber Basically Homeless released an experimental visual anticheat called Waldo that analyzes gameplay footage with deep vision models (Vision Transformers) to score clips for likely cheating. It runs locally, operates on short kill-shot clips, provides frame-level heatmaps and confidence scores, and is intended as an opt-in investigative/research tool rather than a turnkey commercial anticheat.

Introduction: a simple question, an important idea

If AI is being used to build sophisticated cheats, why isn’t AI used to stop them? Waldo answers that question by shifting the detection surface from client memory and input hooks to the rendered video stream. By treating the game’s output as the data source and applying modern video vision models, Waldo attempts to identify behavioral signals consistent with automated aim or assistance while offering explainability through frame-level attention maps.

This approach reframes detection as a visual, auditable task, one that can complement existing anticheat stacks rather than replace them.

Who built Waldo?

Basically Homeless (online handles: BasicallyHomeless on YouTube, Mr-Homeless on GitHub) is an independent creator and researcher known for provocative demos and tooling around gaming, input, and accessibility. Waldo is published as an experimental repo and accompanied by demonstration videos. The project is intended for research and investigation rather than immediate production deployment.

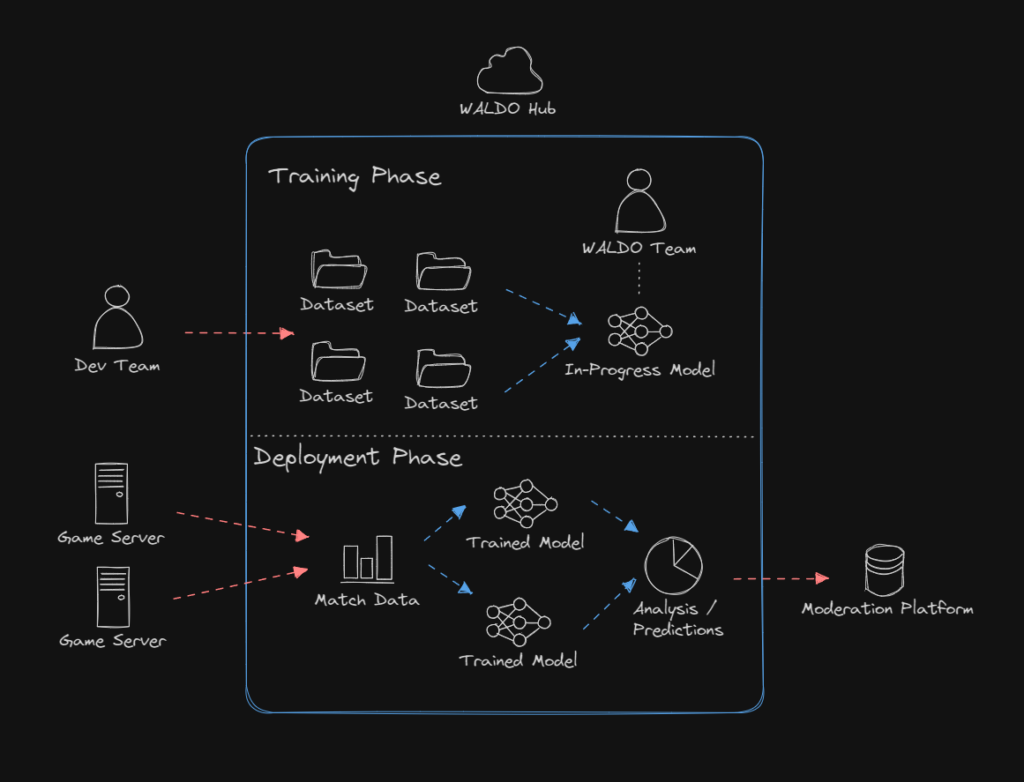

How Waldo works: a technical summary

Waldo’s pipeline is conceptually straightforward and focused on explainability:

- Data source: rendered gameplay video or short, automatically extracted kill-shot clips.

- Preprocessing: isolate events of interest (kills); crop around the crosshair/center region; standardize frame stacks.

- Model architecture: transformer-based video/vision models (e.g., VideoMAE / Vision Transformer variants) fine-tuned on labeled examples of suspicious versus legitimate clips.

- Inference output: per-clip confidence score (range defined by the implementation), per-frame attention/ROI heatmaps, and optional JSON exports for downstream triage or aggregation.

- Deployment model: local, opt-in execution capable of running on user machines for investigation; models and code are open for audit and retraining.

The decision to analyze rendered frames provides two practical benefits: it is less invasive than kernel-level hooks, and it yields human-interpretable visualizations that investigators can inspect.
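To make the preprocessing and export steps above more concrete, here is a minimal sketch in plain Python. It is not taken from the Waldo repository: the function names, the 224-pixel crop, the 16-frame stack, and the JSON field names are all assumptions chosen for illustration. It shows how a crosshair-centered crop box and an evenly spaced frame stack might be computed, and how a per-clip result might be serialized.

```python
import json


def center_crop_box(width, height, crop_size):
    """Return (left, top, right, bottom) for a square crop centered on the frame.

    The crosshair is assumed to sit at the frame center (an assumption).
    """
    size = min(crop_size, width, height)
    left = (width - size) // 2
    top = (height - size) // 2
    return left, top, left + size, top + size


def sample_frame_indices(total_frames, num_frames):
    """Pick num_frames indices spread evenly across a clip.

    Short clips repeat indices, so the stack length stays fixed.
    """
    if total_frames <= 0 or num_frames <= 0:
        return []
    if num_frames == 1:
        return [total_frames // 2]
    step = (total_frames - 1) / (num_frames - 1)
    return [round(i * step) for i in range(num_frames)]


def build_report(clip_id, score, frame_indices, frame_weights):
    """Serialize one clip's result as JSON (hypothetical schema)."""
    report = {
        "clip_id": clip_id,
        "score": score,
        "frame_attention": [
            {"frame": idx, "weight": w}
            for idx, w in zip(frame_indices, frame_weights)
        ],
    }
    return json.dumps(report, indent=2)


if __name__ == "__main__":
    box = center_crop_box(1920, 1080, 224)
    indices = sample_frame_indices(61, 16)
    print(box, indices)
    print(build_report("clip-001", 0.87, indices[:2], [0.2, 0.8]))
```

A fixed-length stack and a fixed crop size keep every clip the same shape, which is what a fine-tuned video model would typically expect as input. The actual values used by Waldo may differ.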

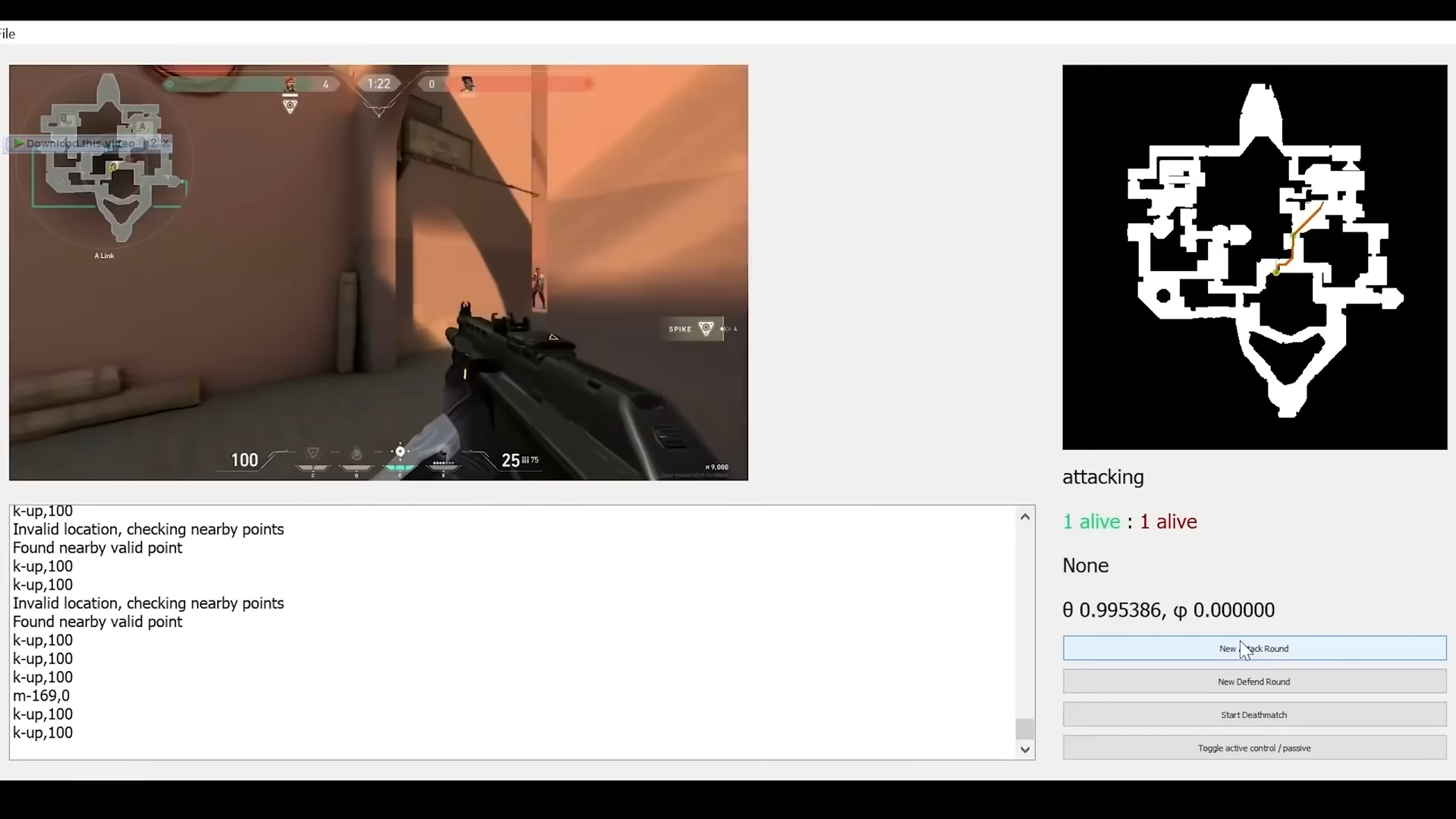

Demo findings and practical behavior

Public demos show Waldo classifying uploaded clips and returning a short report: a confidence metric plus an attention overlay that highlights which frames or objects influenced the decision. In controlled examples, the model differentiates clear examples of automated aim from casual play. However, the demos also make clear where uncertainties remain:

- High-skill human players occasionally trigger high confidence scores.

- Compression artifacts, HUD overlays, or camera/view settings change model responses.

- The model’s thresholds and labels depend heavily on the training dataset.

Because the repository is open, researchers can reproduce experiments and explore model behavior across different data distributions.
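The threshold sensitivity noted above can be explored with a small sweep over labeled validation clips. The sketch below is illustrative only: the scores and labels are made-up values, scores are assumed to lie in [0, 1] (the real range depends on the implementation), and the helper names are hypothetical. One legitimate clip is given a high score to stand in for a high-skill human player.

```python
def confusion_at_threshold(scores, labels, threshold):
    """Count outcomes when clips scoring >= threshold are flagged.

    labels: True for a known cheating clip, False for a legitimate clip.
    """
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for score, is_cheat in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_cheat:
            counts["tp"] += 1
        elif flagged and not is_cheat:
            counts["fp"] += 1
        elif not flagged and is_cheat:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def false_positive_rate(counts):
    """Share of legitimate clips that were flagged."""
    legit = counts["fp"] + counts["tn"]
    return counts["fp"] / legit if legit else 0.0


# Made-up validation data; the 0.92 legitimate clip mimics a very skilled player.
scores = [0.95, 0.90, 0.85, 0.60, 0.40, 0.92, 0.30, 0.10]
labels = [True, True, True, True, False, False, False, False]

if __name__ == "__main__":
    for threshold in (0.5, 0.93):
        c = confusion_at_threshold(scores, labels, threshold)
        print(threshold, c, false_positive_rate(c))
```

Raising the threshold removes the false positive in this toy data, but at the cost of missing most of the cheating clips, which is why a score alone is a weak basis for action.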

Strengths

- Non-invasive: operates on visual output, avoiding client memory inspection or kernel drivers.

- Explainability: frame-level visualizations make it easier to justify flags and enable human triage.

- Auditability and research value: an open repo allows independent validation and model improvement.

- Modular: can be integrated as an auxiliary signal into multi-layer anticheat architectures (investigation, evidence aggregation).

Limitations and risks

- False positives: exceptional human play, stream overlays, or specific camera angles may be misclassified. Flags should not be used alone for punitive actions.

- Training bias: limited or skewed datasets will produce brittle models that do not generalize across maps, weapons, or playstyles.

- Adversarial countermeasures: visual detection can be evaded by modifying HUDs, smoothing inputs, or adding adversarial perturbations to the rendered frames.

- Operational scale: transformer-based video models are computationally heavy; real-time, fleet-scale inference is costly.

- Privacy considerations: gameplay clips may contain personally identifying information (voice, faces, chat), so consent and proper data-handling policies are required.

Ethical, legal, and policy considerations

Any deployment or research use of visual anti-cheat requires clear policies:

- Consent & transparency: players must be informed and opt in where possible when gameplay footage will be analyzed.

- Human-in-the-loop: automated scores should trigger review workflows; bans or sanctions require investigator verification.

- Data retention & minimization: keep only the evidence necessary for triage, and implement pseudonymization or limited retention windows.

- Research disclosure vs. abuse: publishing detection tools helps defenders but also allows attackers to probe and adapt, so balance openness with responsible disclosure.
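As one way to picture the data retention and pseudonymization policy above, the following sketch uses only the Python standard library. The record layout, the 14-day window, and the function names are assumptions for illustration, not part of Waldo. A keyed HMAC replaces the player identifier so records can still be correlated without storing the raw ID, and records older than the retention window are dropped.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(player_id, secret_key):
    """Replace a player ID with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, player_id.encode("utf-8"), hashlib.sha256).hexdigest()


def is_expired(created_at, now, retention_days):
    """True when a record is older than the retention window."""
    return now - created_at > timedelta(days=retention_days)


def purge_expired(records, now, retention_days):
    """Keep only records still inside the retention window."""
    return [r for r in records if not is_expired(r["created_at"], now, retention_days)]


if __name__ == "__main__":
    key = b"example-key-store-this-securely"  # hypothetical key for the demo
    now = datetime(2024, 1, 31, tzinfo=timezone.utc)
    records = [
        {"player": pseudonymize("player-123", key),
         "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
        {"player": pseudonymize("player-456", key),
         "created_at": datetime(2024, 1, 25, tzinfo=timezone.utc)},
    ]
    kept = purge_expired(records, now, retention_days=14)
    print(len(kept), kept[0]["player"][:12])
```

Using a keyed digest rather than a plain hash means someone without the key cannot simply hash a known player ID to find matching records.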

Where visual AI belongs in an anti-cheat stack

Visual AI is best deployed as a complementary signal in a layered defense strategy:

- Prevention layer: secure client, trusted execution, and server-side sanity checks.

- Detection layer: telemetry and behavioral analytics combined with visual AI for explainable evidence.

- Investigation layer: human triage, cross-correlation of visual findings with server logs and inputs.

- Remediation layer: graduated responses based on multi-source evidence and human review.

When used responsibly, visual models increase the evidence available to investigators and can help prioritize manual review in large-scale operations.
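The layering above can be sketched as a small triage function that combines signals and only ever routes cases to human review. Everything here is hypothetical: the `Evidence` fields, the 0.7 threshold, and the queue names are assumptions, and scores are assumed to lie in [0, 1]. The point of the sketch is the design choice that no single signal, and no automated path, produces a sanction.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    visual_score: float     # visual model confidence, assumed in [0, 1]
    telemetry_score: float  # behavioral analytics score, assumed in [0, 1]
    server_flag: bool       # a server-side sanity check was tripped


def triage(evidence, review_threshold=0.7):
    """Map combined evidence to a review queue; never to a ban."""
    agreeing_sources = sum([
        evidence.visual_score >= review_threshold,
        evidence.telemetry_score >= review_threshold,
        evidence.server_flag,
    ])
    if agreeing_sources >= 2:
        return "priority_review"
    if agreeing_sources == 1:
        return "standard_review"
    return "no_action"


if __name__ == "__main__":
    print(triage(Evidence(0.95, 0.20, False)))  # visual signal alone
    print(triage(Evidence(0.95, 0.80, False)))  # two sources agree
```

A high visual score on its own only reaches the standard queue, which matches the guidance that flags should not be used alone for punitive actions.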

In Conclusion

Waldo highlights a practical, explainable direction for AI-assisted cheating detection by treating rendered gameplay as a data source. It demonstrates the potential for non-invasive, auditable detection signals, which are especially valuable for incident investigators and researchers. However, model limitations, privacy concerns, and adversarial dynamics mean visual AI should augment, not replace, comprehensive anticheat architectures.

Links: Waldo Official Site | Saving FPS Games - AI Anti-Cheat (YouTube video)